Self-Distilled StyleGAN: Towards Generation from Internet Photos


Abstract

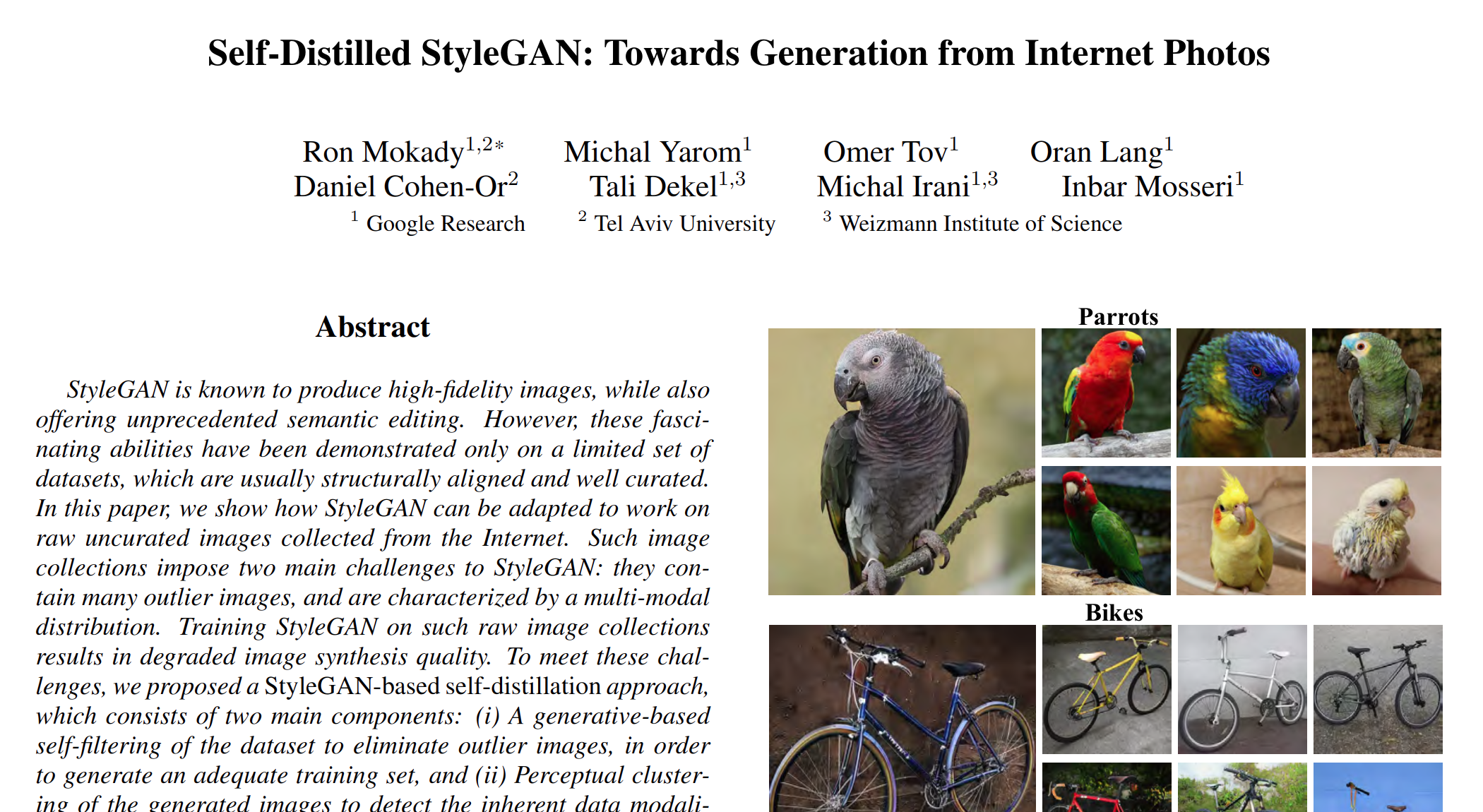

StyleGAN is known to produce high-fidelity images, while also offering unprecedented semantic editing. However, these fascinating abilities have been demonstrated only on a limited set of datasets, which are usually structurally aligned and well curated. In this paper, we show how StyleGAN can be adapted to work on raw uncurated images collected from the Internet. Such image collections pose two main challenges for StyleGAN: they contain many outlier images, and they are characterized by a multi-modal distribution. Training StyleGAN on such raw image collections results in degraded image synthesis quality. To meet these challenges, we propose a StyleGAN-based self-distillation approach, which consists of two main components: (i) generative self-filtering of the dataset to eliminate outlier images and produce an adequate training set, and (ii) perceptual clustering of the generated images to detect the inherent data modalities, which are then employed to improve StyleGAN's "truncation trick" in the image synthesis process. The presented technique enables the generation of high-quality images while minimizing the loss in diversity of the data. Through qualitative and quantitative evaluation, we demonstrate the power of our approach on new, challenging, and diverse domains collected from the Internet.
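As a rough illustration of component (i), the sketch below keeps the fraction of images with the lowest outlier score. The score itself is an assumption: the abstract does not say how it is computed, so the code simply takes a hypothetical per-image value (for example, a perceptual distance between an image and its reconstruction by the generator). The function name `self_filter` and the parameter `keep_fraction` are illustrative, not taken from the paper.

```python
import numpy as np

def self_filter(scores: np.ndarray, keep_fraction: float = 0.8) -> np.ndarray:
    """Return the sorted indices of the images to keep.

    scores[i] is a hypothetical per-image outlier score (assumption):
    lower means the generator represents image i better.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, int(round(keep_fraction * len(scores))))
    # argsort is ascending, so the first n_keep entries have the lowest scores.
    keep = np.argsort(scores, kind="stable")[:n_keep]
    return np.sort(keep)

# Usage: keep the best-fitting 60% of five images.
scores = np.array([0.1, 0.9, 0.2, 0.8, 0.3])
kept = self_filter(scores, keep_fraction=0.6)
```

Filtering by a fixed fraction rather than an absolute threshold is a design choice of this sketch; it makes the size of the resulting training set predictable.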

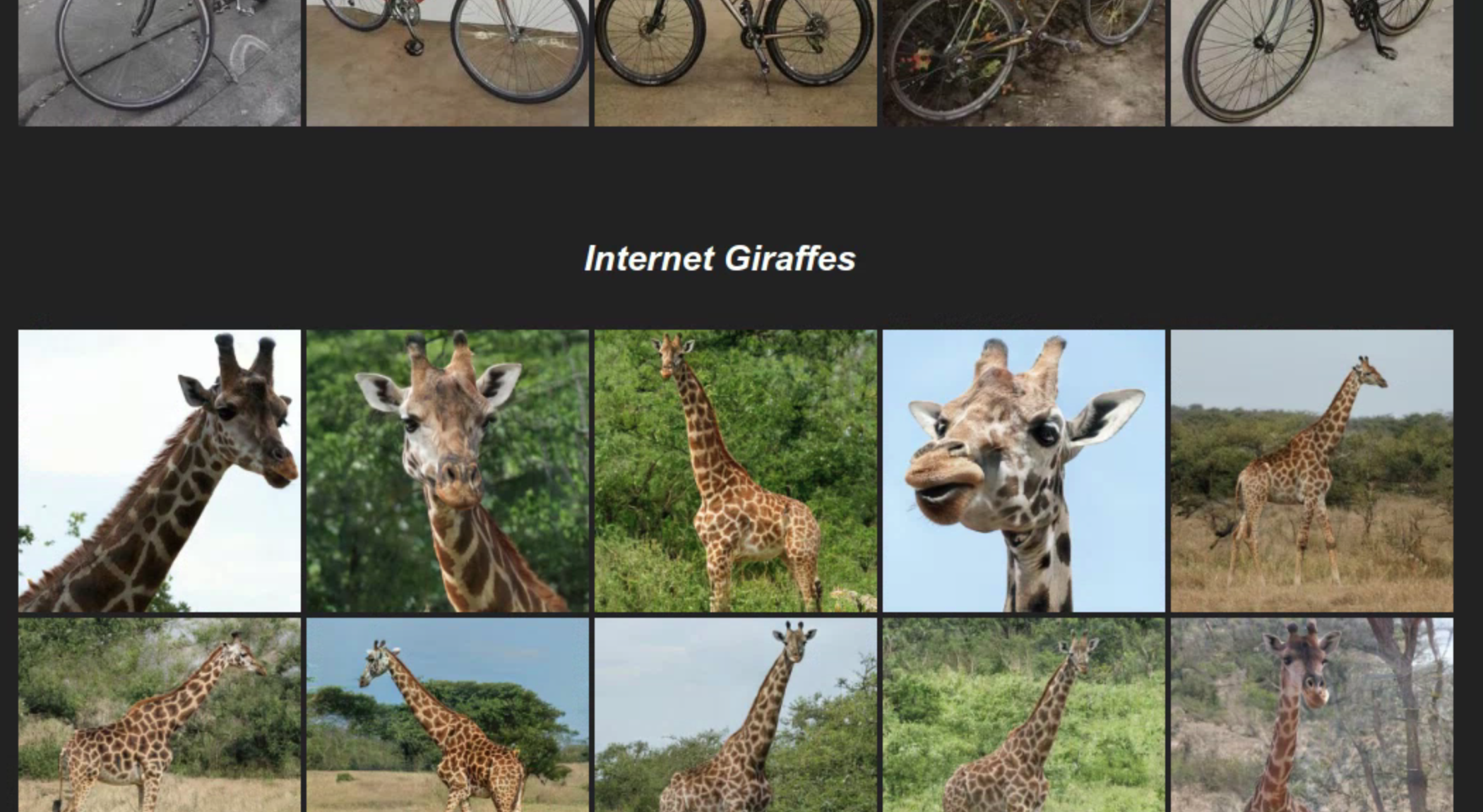

Image Generation Results for a Variety of Domains

Our Self-Distilled StyleGAN framework enables high-quality and diverse image generation from uncurated Internet datasets.


Cluster Center Visualization

Raw uncurated images collected from the Internet tend to be rich and diverse, consisting of multiple modalities with different geometry and texture characteristics. To maintain the diversity of the generated images while improving their visual quality, we cluster the generated images perceptually to detect these modalities, and use the resulting cluster centers to improve StyleGAN's "truncation trick".

Here we show random walks between our cluster centers in the latent space of various domains. As can be seen, the cluster centers are highly diverse and capture the multi-modal nature of the data well.
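To make the role of the cluster centers concrete, here is a minimal sketch of truncating a latent code toward its nearest cluster center instead of a single global mean. The standard truncation trick interpolates a code toward a center with a factor `psi`. Choosing the center by Euclidean distance in latent space, and the names `truncate` and `multimodal_truncate`, are assumptions of this sketch rather than details stated on this page.

```python
import numpy as np

def truncate(w, center, psi=0.7):
    """Truncation trick: pull w toward a center by factor psi (psi=1 keeps w)."""
    return center + psi * (w - center)

def multimodal_truncate(w, centers, psi=0.7):
    """Truncate w toward its nearest cluster center.

    w: (d,) latent code; centers: (k, d) cluster centers in the same space.
    Nearest-center assignment by Euclidean distance is an assumption.
    """
    dists = np.linalg.norm(centers - w, axis=1)
    nearest = int(np.argmin(dists))
    return truncate(w, centers[nearest], psi), nearest

# Usage: a code near the second of two centers is pulled toward that center.
centers = np.array([[0.0, 0.0], [10.0, 10.0]])
w = np.array([8.0, 12.0])
w_trunc, idx = multimodal_truncate(w, centers, psi=0.5)
```

With a single center this reduces to the ordinary truncation trick; with several centers, each sample is pulled toward its own modality, which is how diversity can be preserved while quality improves.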

Domains shown: Lions, Dogs, Parrots, Potted Plant.
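A walk between cluster centers can be sketched as piecewise linear interpolation in latent space. This is only an assumption about how such a walk might be built: the page does not specify the interpolation scheme or the visiting order, and the function `walk_between_centers` and its parameter `steps_per_segment` are hypothetical. Each resulting code would then be passed to the generator to render one frame.

```python
import numpy as np

def walk_between_centers(centers, steps_per_segment=8):
    """Linearly interpolate through consecutive cluster centers.

    centers: (k, d) array, visited in the given order.
    Returns an array of shape ((k - 1) * steps_per_segment + 1, d).
    """
    centers = np.asarray(centers, dtype=float)
    path = []
    for a, b in zip(centers[:-1], centers[1:]):
        # endpoint=False avoids duplicating the center shared by two segments.
        for t in np.linspace(0.0, 1.0, steps_per_segment, endpoint=False):
            path.append((1.0 - t) * a + t * b)
    path.append(centers[-1])
    return np.stack(path)

# Usage: visit three centers in a shuffled (random) order.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [2.0, 2.0], [2.0, 0.0]])
codes = walk_between_centers(centers[rng.permutation(len(centers))])
```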

Semantic Editing Examples

Our approach retains StyleGAN's remarkable semantic editing capabilities, enabling a variety of edits in each domain. Examples are shown for Lions (Roar, Add / Remove Mane, Head Pane Rotation), Parrots, and Horses.

Paper

Results / Supplementary Material: [Link]

Datasets and Models: [Datasets&Code]

Bibtex

@misc{mokady2022selfdistilled,
  title={Self-Distilled StyleGAN: Towards Generation from Internet Photos},
  author={Ron Mokady and Michal Yarom and Omer Tov and Oran Lang and Daniel Cohen-Or and Tali Dekel and Michal Irani and Inbar Mosseri},
  year={2022},
  eprint={2202.12211},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

Last updated: Apr 2021